Automated Testing Part 5: Dependencies

In the previous article we looked at techniques for refactoring legacy code to expose individual functional pieces for unit testing. By supplying predictable test data in a correctly configured and controlled environment, your tests can prove that the code of such routines continues to operate as expected.

Whilst it is easy enough to test the inputs and outputs of individual routines, the challenge comes when you need to test routines that depend on external factors. These factors might include other routines that get called along the way, data fed into or selected by the code, external data feeds, spooler output, and user interfaces. Each of these presents a barrier to straightforward unit testing. Handling dependencies can be the most time-consuming part of writing unit tests.

Faking It

Central to the concept of unit testing is the need to isolate the code under test, so that you know exactly what is being tested and why. In an object oriented world that is challenging enough, but on a MultiValue application composed of many interlocking legacy subroutines that can be a lot tougher to achieve. How do you test a routine that calls on other subroutines to perform important tasks — subroutines that may not yet have their own bodies of tests and whose own effects and dependencies you may not have the time to fully understand?

When faced with calls to dependent routines, the classic approach is to fake it using mocks, stubs, or other similar doubles. A fake is a routine or object that stands in place of the true routine that would normally be called by the code under test, and that can be used to produce predictable output (stub) or to verify the behaviour of the call (mock). For a good discussion of the difference between mocks, stubs, and similar artifacts visit www.martinfowler.com. As with all such resources, a level of translation is required to apply the standard academic model to MultiValue applications.

Let us take a simple example. Imagine that you are testing a routine that places an order. As part of the process this may call another routine that performs a credit check on the customer, and may return one of three values: the customer has sufficient credit, the customer is on hold, or additional approval may be required before their order can be fully accepted. As a developer, you want to test that your order submission will work correctly in all three situations.

One option might be to create and use three different customers, each of which fulfils one of those criteria. That makes a kind of naive sense in simple cases, but the data required to force each decision may be complex and take a great deal of setting up, and it also introduces another dependency. Should the rules involved change over time, your test will become invalid; whilst this would show up in regression tests, it localizes the error to the wrong area of responsibility. The credit check, and any data required to implement it, should be the sole concern of the credit check routine and not the responsibility of the order submission test.

A key part of unit testing is to be clear about just what is being tested. The order submission calls a credit check routine to return a value, but should not itself care how that value is calculated. The job of the order submission is simply to respond to that value. This is what is meant by functional isolation in terms of unit testing.

So rather than worry about setting up all the necessary data to force the credit check to return the required value, a more practical solution would be to fake the credit check subroutine for the duration of the order submission tests. The fake subroutine sits in place of the real credit check and will present exactly the same arguments, but will return a specific value to the order submission when called under test conditions. The internal logic might even be as simple as:

    Begin Case
       Case CustomerId = FIRST_TEST_CUSTOMER
          Result = CREDIT_OK
       Case CustomerId = SECOND_TEST_CUSTOMER
          Result = CREDIT_ONHOLD
       Case CustomerId = THIRD_TEST_CUSTOMER
          Result = CREDIT_AUTH
    End Case

You can then call the order submission passing each of these customer numbers, knowing what the routine will return. You could similarly drive the output from a file or named common block stacked with required responses from the unit test itself.
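The idea of stacking the required responses from the test itself can be sketched in a few lines. The following is a language-neutral illustration written in Python rather than MultiValue BASIC; the routine names, the result codes, and the outcomes returned by `submit_order` are all assumptions made for the example, not part of any real application.

```python
# Hypothetical result codes; the names echo the constants used in the text.
CREDIT_OK, CREDIT_ONHOLD, CREDIT_AUTH = "OK", "ONHOLD", "AUTH"


def submit_order(customer_id, credit_check):
    """Illustrative order submission: it only reacts to the credit result."""
    result = credit_check(customer_id)
    if result == CREDIT_OK:
        return "ACCEPTED"
    if result == CREDIT_ONHOLD:
        return "REJECTED"
    return "PENDING_APPROVAL"


def make_stacked_credit_check(responses):
    """Build a fake credit check that returns the stacked responses in order."""
    queue = list(responses)

    def fake_credit_check(customer_id):
        # The customer id is accepted but ignored: the test decides the answer.
        return queue.pop(0)

    return fake_credit_check


fake = make_stacked_credit_check([CREDIT_OK, CREDIT_ONHOLD, CREDIT_AUTH])
outcomes = [submit_order("C1", fake) for _ in range(3)]
print(outcomes)  # ['ACCEPTED', 'REJECTED', 'PENDING_APPROVAL']
```

Because the fake ignores the customer entirely, the test needs no customer data at all, which keeps the credit rules out of the order submission test.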

In object oriented languages, fake objects can be accessed through inheritance or interfaces. In the MultiValue world we can simulate the difference in calls by using indirect calls (CALL @name) or by overlaying the catalog pointers. Keeping a FAKE.BP file from which you can temporarily catalog a replacement routine is often the simpler solution, though you may need to be careful about how the database caches called subroutine code. You also need to ensure that tests involving fakes are suitably isolated to prevent the possibility of two developers running tests at the same time in the same account each setting up a different temporary fake for the same routine. It is one of many strong arguments in support of each developer testing in a separate account.
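As a rough analogy for overlaying a catalog pointer and putting it back afterwards, the Python sketch below keeps a dictionary of routine names and swaps one entry for the duration of a test. The `CATALOG` dictionary, the `call` helper, and the routine name `CREDIT.CHECK` are hypothetical stand-ins; a real implementation would work with the database's own catalog and would have to deal with cached object code.

```python
from contextlib import contextmanager

# Hypothetical stand-in for the catalog: routine names mapped to callables.
CATALOG = {}


def call(name, *args):
    """Indirect call: look the routine up by name at call time."""
    return CATALOG[name](*args)


@contextmanager
def faked(name, replacement):
    """Temporarily catalog a replacement, restoring the original afterwards."""
    missing = object()
    original = CATALOG.get(name, missing)
    CATALOG[name] = replacement
    try:
        yield
    finally:
        # Restore even if the test fails, so no fake is left behind.
        if original is missing:
            del CATALOG[name]
        else:
            CATALOG[name] = original


def real_credit_check(customer_id):
    raise RuntimeError("the real routine needs live data")


CATALOG["CREDIT.CHECK"] = real_credit_check

with faked("CREDIT.CHECK", lambda customer_id: "ONHOLD"):
    during = call("CREDIT.CHECK", "C1")

restored = CATALOG["CREDIT.CHECK"] is real_credit_check
print(during, restored)  # ONHOLD True
```

The restore step in the `finally` clause is the important part of the design: a fake that outlives its test is exactly the kind of shared state that causes trouble when two developers test in the same account.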

Not all fakes need to return data. Let us imagine that later in the process your order submission routine sends a confirmation to the customer by calling a general purpose email subroutine. Do you need to test the email functionality here? Clearly not. Once again that is not the responsibility of the code under test. What does matter, however, is the fact that the email routine gets called and that valid arguments are passed in. Mocking routines verify the interaction of the calls themselves by capturing and validating the input and the number of calls made.

mock.Setup(x => x.DoSomething(It.IsAny<int>())).Returns((int i) => i * 10);

mock.Verify(foo => foo.Execute("stuff"), Times.AtLeastOnce());
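The same capture-and-verify idea does not require a mocking framework. As a minimal sketch in Python, a hand-rolled recorder can stand in for the email routine and be interrogated afterwards; the class name, its methods, and the `confirm_order` routine are invented for this illustration.

```python
class CallRecorder:
    """Minimal hand-rolled mock: records every call for later assertions."""

    def __init__(self):
        self.calls = []

    def __call__(self, *args):
        self.calls.append(args)

    def was_called(self):
        return len(self.calls) > 0

    def get_arg(self, position, call_index=0):
        # Positions are 1-based, an arbitrary choice for this sketch.
        return self.calls[call_index][position - 1]


def confirm_order(order, send_email):
    """Illustrative code under test: it must email the customer once."""
    send_email(order["email"], "Order " + order["id"] + " confirmed")


email_mock = CallRecorder()
confirm_order({"id": "1001", "email": "me@my.com"}, email_mock)

assert email_mock.was_called()
assert len(email_mock.calls) == 1
assert email_mock.get_arg(1) == "me@my.com"
```

No email is sent and no mail server is needed; the test only establishes that the call was made once, with a valid address.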

In the mvTest world the calls are still procedural and so the validation can be part of the script. Even so, a mock is presented as an object whose lifetime extends beyond the call allowing you to assert facts about the call:

    Assert "Email routine was called", myEmailMock.wasCalled()
    AssertIs "Valid email was passed", "me@my.com", myEmailMock.GetArg(1)

Data Dependencies

In a modular application, or one that has been refactored to embrace single responsibility principles, using fakes can go a long way towards reducing the amount of time required to identify and set up test data for your application. For other data schemas it is usual to fake out all database interactions, but with a MultiValue application you will inevitably need to test the interaction with the file system.

In unit testing the data with which we work must be both predictable and reproducible. That can be difficult to achieve on development systems where any kinds of rubbish may be left behind by previous tests or generations of the system, or where data may be broken as the result of unfinished or erroneous development work.

Periodically refreshing your development systems with sanitized copies of the live data is a simple solution but from a unit testing perspective it is not the answer. If you have many developers working on different tasks, some of these may be dependent on future data changes that are still being coded by other teams. If your production data is volatile, you may find that you simply didn't have certain types of data in your database at a particular refresh point so vital test situations might get missed. And depending on where you are in the business cycle, the point at which the data refresh takes place may not be the right point for a team testing period end, specific end-of-day or intra-day operations. Having one team run an end-of-day batch may even wipe out the very data required by another team!

So here is the hard fact: whilst snapshots may be fine for user acceptance testing, you cannot depend on existing test data for unit or CI tests. A unit test should create and tear down the data it needs. That is tough work and probably the most time-consuming part of any test strategy, but ultimately, if you want control over your testing all the way through the process, it is unavoidable. It can also expose risks if the test data does not match your live data. Unit testing alone is never a replacement for user acceptance testing.

If you can avoid using direct-by-path access methods to your data, the process of creating and populating test files can mirror that of your subroutine fakes. This typically involves creating and tearing down temporary test files and replacing the file pointers in the MD or VOC in the same way as the catalog pointers for subroutine fakes. These files do not necessarily need to be the same size as the live files, and if you need to populate them, the method needs to be as convenient and reproducible as possible. Using UI scripting to run data entry screens, or calling refactored routines with test data from spreadsheets or external sources, may be unavoidable for complex data, but again it creates dependencies for the tests and is therefore discouraged.
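The create, repoint, and tear down cycle can be illustrated with a short Python sketch. Here a dictionary plays the part of the VOC or MD and a temporary directory plays the part of the test file; `FILE_POINTERS`, `FakeFile`, `read_record`, and the live path shown are all hypothetical names chosen for the example.

```python
import shutil
import tempfile
import uuid
from pathlib import Path

# Hypothetical stand-in for the VOC or MD: logical file names mapped to paths.
FILE_POINTERS = {"CUSTOMERS": Path("/data/live/CUSTOMERS")}


class FakeFile:
    """Create a uniquely named test file and repoint the logical name at it."""

    def __init__(self, logical_name, records):
        self.logical_name = logical_name
        self.records = records

    def __enter__(self):
        self.original = FILE_POINTERS[self.logical_name]
        self.path = Path(tempfile.gettempdir()) / f"TEST_{uuid.uuid4().hex}"
        self.path.mkdir()
        for record_id, body in self.records.items():
            (self.path / record_id).write_text(body)
        FILE_POINTERS[self.logical_name] = self.path
        return self.path

    def __exit__(self, *exc_info):
        # Restore the pointer first so nothing is left aimed at a deleted file.
        FILE_POINTERS[self.logical_name] = self.original
        shutil.rmtree(self.path)
        return False


def read_record(logical_name, record_id):
    """Read through the pointer, as application code would."""
    return (FILE_POINTERS[logical_name] / record_id).read_text()


with FakeFile("CUSTOMERS", {"C1": "Acme Ltd"}) as test_path:
    seen = read_record("CUSTOMERS", "C1")

print(seen, test_path.exists())
```

The code under test never learns that it was reading a test file, and the live pointer is restored whether or not the test passes.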

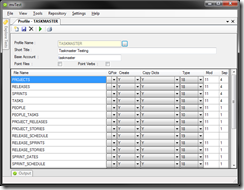

mvTest manages file fakes using a profile manager to specify the new build requirements, which can be attached to a single test or to a batch (fig. 1). For each of these it creates a new file of the appropriate file type under a unique name and then creates the VOC or MD pointer under the real file name through which to access it. This is a safety feature that makes sure there are no old pointers left lying around in the account that might be pointing at shared (or even live) data. Dictionaries are populated from the live files and any secondary indices are created; these remain in place until the end of the test, when the temporary files are removed and the original file pointers restored. Do remember, if you adopt a similar scheme for your own testing, that some databases will not delete files that are open in named common, so you need to consider closing files that would normally remain open for the duration of the application.

Fig. 1 mvTest Profile

Separating Concerns

What about other dependencies — those on devices, spooler, data flows, or user interfaces? Again you can leverage the same concepts of isolating just what it is that you need to test. Let's go back to the order submission and suppose that it produces a document for the invoice. How do you go about testing that in your unit tests?

If you are producing the document as plain text or PCL directly from the database spooler, you could capture and read the resulting file and interrogate it just like any other content. Writing comparison routines that strip out time-dependent or data-dependent values from text based reports is something I have done for systems migrations in the past, although the rules can be complex to define. But if you are using a high end document engine like mvPDF, that is a different matter. The document may be protected, encrypted, certainly compressed, and may carry digital signatures and other assets that make parsing it far from straightforward. What we are looking at is the need to apply the principle known as separation of concerns.
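For the plain text case, a comparison routine of this kind might look something like the Python sketch below. The patterns are assumptions for the illustration (ISO dates, HH:MM:SS times, and a "Page n" footer); a real report would need its own list of volatile values.

```python
import re

# Assumed formats for this sketch: ISO dates, HH:MM:SS times, "Page n" footers.
VOLATILE_PATTERNS = [
    (re.compile(r"\d{4}-\d{2}-\d{2}"), "<DATE>"),
    (re.compile(r"\d{2}:\d{2}:\d{2}"), "<TIME>"),
    (re.compile(r"Page \d+"), "Page <N>"),
]


def normalise(report_text):
    """Replace volatile values with placeholders and trim trailing spaces."""
    lines = []
    for line in report_text.splitlines():
        for pattern, placeholder in VOLATILE_PATTERNS:
            line = pattern.sub(placeholder, line)
        lines.append(line.rstrip())
    return lines


master = "Invoice 1001   2023-01-05 09:15:00\nTotal: 120.00\nPage 1"
latest = "Invoice 1001   2024-06-30 17:42:10\nTotal: 120.00\nPage 1"
changed = "Invoice 1001   2024-06-30 17:42:10\nTotal: 125.00\nPage 1"

same_as_master = normalise(master) == normalise(latest)
differs_from_master = normalise(master) != normalise(changed)
print(same_as_master, differs_from_master)  # True True
```

Only the run date and time differ between the first two reports, so they compare equal once normalised, while the changed total is still detected.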

From the testing perspective we can consider that a document is composed of two things — the data and the presentation. For the presentation you may need visual confirmation at the user acceptance testing stage — a good technique for CI testing is to simply print masters on acetate and overlay the newly created documents for quick checking.

For the purpose of automated unit testing, the focus instead rests on the information that will be rendered. Hopefully your legacy application does not contain lots of programs stating "print 20 spaces, then this value." If so, they are ripe for refactoring anyway. At the very least you should be supplying data to a print template so that elements such as positioning and styling are separated from the production of the data to fill it. It does not need to be as sophisticated as an mvPDF report or merge form, but just a set of instructions to state where different data should go and how it should repeat. Then the inevitable user changes are much easier to accommodate. If even that is not possible, you can still split the print production into a separate routine and feed it the data to render, so that you can then fake the production routine for your unit tests. In all these cases, what you need to test is the content and not the presentation of the output. What is more important, that the invoice lines up? Or that it shows the right figures?
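Splitting the production of the data from its rendering might be sketched as follows. This is an illustrative Python outline only: `build_invoice_data`, `submit_invoice`, and the order layout are invented for the example, and the renderer is simply whatever callable the caller supplies.

```python
from decimal import Decimal


def build_invoice_data(order):
    """Produce the content of the invoice with no knowledge of layout."""
    lines = [
        {"sku": sku, "qty": qty, "amount": price * qty}
        for sku, qty, price in order["lines"]
    ]
    return {
        "order_id": order["id"],
        "lines": lines,
        "total": sum((line["amount"] for line in lines), Decimal("0")),
    }


def submit_invoice(order, render):
    """Hand the data to whichever renderer is supplied; tests pass a fake."""
    data = build_invoice_data(order)
    render(data)
    return data


# In a unit test the fake renderer just captures what it was asked to print.
captured = []
order = {
    "id": "1001",
    "lines": [("A1", 2, Decimal("9.99")), ("B2", 1, Decimal("5.00"))],
}
submit_invoice(order, captured.append)

print(captured[0]["total"])  # 24.98
```

The test asserts on the figures handed to the renderer, so it keeps passing when the layout changes and fails only when the content is wrong.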

The same is true of data flows. Do your routines under test care where the data originated if it can be passed in the same format and volumes? Feeding in test data from a spreadsheet or CSV file is no different than feeding it from a hand held scanner or message queue but is quicker to repeat and requires far less setup. That does not mean that you can avoid separately testing those interfaces also, probably in the acceptance phases, but the rules around code isolation mean that you do not need to run them for all the data combinations demanded by your unit tests. Consider encapsulating and faking socket operations, remote database calls, or anything else that brings data in or out of your area of immediate concern.
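As a small illustration of feeding the same routine from a different source, the Python sketch below adapts CSV rows to the shape that a device feed might deliver. The routine `receive_stock`, the column names, and the sample data are assumptions made for the example.

```python
import csv
import io


def receive_stock(movements):
    """Illustrative routine under test: totals quantities per item code."""
    totals = {}
    for item, qty in movements:
        totals[item] = totals.get(item, 0) + qty
    return totals


def movements_from_csv(handle):
    """Adapt CSV rows to the same (item, quantity) shape a scanner would feed."""
    for row in csv.DictReader(handle):
        yield row["item"], int(row["qty"])


# An in-memory file keeps the example self-contained; a test could open a
# real CSV file in exactly the same way.
sample = io.StringIO("item,qty\nWIDGET,5\nBOLT,12\nWIDGET,3\n")
totals = receive_stock(movements_from_csv(sample))
print(totals)  # {'WIDGET': 8, 'BOLT': 12}
```

The routine under test sees only a sequence of item and quantity pairs, so swapping the adapter changes nothing about what is being tested.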

UI Dependencies

User Interface dependencies are probably the most complex pieces to untangle from a legacy application. As with printing, the best long term option is to separate these out so that your business code is segregated from the user interface, and presented to a screen driver or common routines for input, navigation and display of data. These routines can be augmented by standard validations: testing for data format, ranges, lists of values, file relations and the like. Hand coding screen activity has always been a waste of time.

If you are running with GUI or web interfaces then by definition you have already split out the presentation logic, and should use the appropriate platform tools like Selenium, Jasmine, or WatiN to test the UI separately from the MultiValue logic behind it. Testing through the UI is always limiting, as you are bound to miss unassigned variable messages and other errors swallowed by the middleware, and working at that remove makes it more difficult to isolate issues when they are found. It is always better to test the logic first and then develop the UI over the top of the verified code.

For a legacy system where it may be simply unaffordable to refactor the code in this way, or where doing so may take considerable time, the simplest UI tests may be no more than a series of DATA statements or, for the brave, a PROC that can stack the values to fill the various input statements that drive an application. There are limitations to this approach: low level key-based input routines may not work with DATA stacked input, handling function keys is difficult, and the tests themselves are hard to maintain. If you have a common input subroutine in place of INPUT statements in your code, that gives you the option of reading inputs from a script, but that of course will not work for any system generated messages (Press any key to continue, for example). Test routines that rely on the DATA stack also rarely handle exceptions well: you can drive an entry screen to simulate order entry, but what happens when the system reports that the item is now out of stock?
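The weakness of a fixed stack of answers shows up as soon as an unexpected prompt appears. The Python sketch below imitates a common input routine fed from a script; `ScriptedInput`, `enter_order`, and the prompts are invented for the illustration.

```python
class ScriptedInput:
    """Stand-in for a common input routine that answers prompts from a script."""

    def __init__(self, answers):
        self.answers = list(answers)
        self.prompts_seen = []

    def __call__(self, prompt):
        self.prompts_seen.append(prompt)
        if not self.answers:
            raise AssertionError("Unexpected prompt: " + prompt)
        return self.answers.pop(0)


def enter_order(get_input, in_stock):
    """Illustrative entry screen; every input goes through get_input."""
    item = get_input("Item code")
    qty = int(get_input("Quantity"))
    if item not in in_stock:
        # An exception path that the script must anticipate.
        if get_input("Out of stock. Back order? (Y/N)") != "Y":
            return None
    return {"item": item, "qty": qty}


happy = ScriptedInput(["WIDGET", "2"])
happy_result = enter_order(happy, {"WIDGET"})
print(happy_result)  # {'item': 'WIDGET', 'qty': 2}

short_script = ScriptedInput(["WIDGET", "2"])
try:
    enter_order(short_script, set())
    failure = None
except AssertionError as error:
    failure = str(error)
print(failure)  # Unexpected prompt: Out of stock. Back order? (Y/N)
```

The same two answers work while the item is in stock and fail once it is not, which is the maintenance problem with stacked input in miniature. Failing loudly on an unexpected prompt is at least better than letting the next stacked value answer the wrong question.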

A better option is to invest in, or code up, a test scripting product that can drive a TELNET or SSH connection to simulate terminal activity, and that can adapt to handle exceptions. This avoids the limitations of DATA stacked input by fully simulating the user, and it also offers the benefit of being able to read the screen, much as a user would, to validate what is displayed.
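The essential difference is that the script reads the screen after each step and branches on what it finds. The Python sketch below shows that shape only: `FakeSession` is a hypothetical in-memory stand-in so that the example runs without a server, and the screen texts are invented. A real script would drive an actual TELNET or SSH connection.

```python
class FakeSession:
    """Hypothetical stand-in for a terminal session, so the sketch is runnable."""

    def __init__(self, stock):
        self.stock = stock
        self.screen = "Item code:"
        self.sent = []

    def send(self, text):
        self.sent.append(text)
        if self.screen == "Item code:":
            if text in self.stock:
                self.screen = "Quantity:"
            else:
                self.screen = "Item out of stock. Press any key"
        elif self.screen.startswith("Item out of stock"):
            self.screen = "Item code:"
        else:
            self.screen = "Order saved"


def drive_order_entry(session, item, qty):
    """Send input, read the screen after each step, branch on what appears."""
    session.send(item)
    if "out of stock" in session.screen:
        session.send(" ")  # acknowledge the message as a user would
        return "OUT_OF_STOCK"
    session.send(str(qty))
    return "SAVED" if session.screen == "Order saved" else "UNKNOWN"


saved = drive_order_entry(FakeSession({"WIDGET"}), "WIDGET", 2)
refused = drive_order_entry(FakeSession({"WIDGET"}), "GADGET", 1)
print(saved, refused)  # SAVED OUT_OF_STOCK
```

Because the script checks the screen before deciding what to send next, the out of stock message becomes a handled branch rather than a derailed test.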

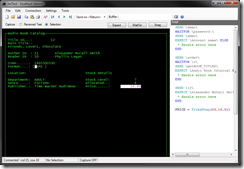

mvTest has built-in support for TELNET, SSH and TELNET/SSL operations that run as a second connection beside the main test connection (fig. 2). These scripts can send input and function keys, can examine the content of the screen or output stream, can wait for and branch on specific messages appearing, and can gather input from external sources to drive tests through the terminal connection. At the same time, the main connection can perform database-level checks to make sure that what is entered on screen finds its way into the database, or set up the preconditions before running a UI test, all written as part of the same script so that it is easy to see both sides of the operation. Other telnet scripting products are available but lack the deep reach into the database.

Fig. 2 UI Script Recorder