Automated Testing Part 3: Integration Testing

In the previous article I introduced the subjects of unit testing and test driven development. In this piece we move on to the next phase in automated testing: integration testing. By now you have completed your lovingly crafted and fully test-covered new code unit. Before you release it into the wild, you need to make sure that it will play nicely with the other features.

Integration testing primarily seeks to ensure that:

- New or amended code does not disrupt the code base.

- The code operates in context.

- The code can be safely deployed.

Code Disruption

In his excellent book Joel on Software, Joel Spolsky describes the Daily Build undertaken when he was in charge of Excel development at Microsoft. The purpose was to ensure that all necessary changes were checked in and functional at the end of the day. The punishment for breaking the build was the shame and rightful opprobrium of one's peers, plus the penalty of babysitting the next builds (until the next culprit took over). There was a serious side to this: at any point they knew they could release a build. Agile practices take a similar tack today.

In the MultiValue world we do not have large executables linked from multiple sources, in the way of a Java or C# project. Nor do we have any metadata that can be examined to ensure that libraries will expose the right method signatures. Instead, we build separate units in the forms of programs, subroutines, external functions, GCI routines, PROCs, paragraphs, and other assets that cooperate to form a solution. These are only discovered at run time, and so the only ways to ensure that the system will remain undamaged following a code release are through a mixture of static code analysis and exercising the system.

Static code analysis can take you part of the way. In mvTest today, and before as part of a pre-compiler at my previous work, I include a simple code checker that can spot obvious anomalies: mismatches in the number of arguments on a subroutine call or in declared sizes of common blocks, subroutines that cannot be found, and warnings over variable name transpositions and casing that might not be caught by the compiler. But there are limits on what a code check can reasonably deliver, since indirect subroutine calls (Call @Name), passed file variables, and various dynamic calls (creating and opening Q pointers, generating selection statements etc.) can only really be caught at runtime. So a code checker is really a handy hint, a first port of call, and not something to rely upon. Exercising the system through workflow testing is the only safe solution.
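To make the idea of an argument-count check concrete, here is a minimal sketch in Python. It is not how mvTest works internally; the regular expressions, the function names, and the assumption that each subroutine is declared under the name it is called by are all simplifications made for illustration. Indirect calls (Call @Name) are skipped on purpose, since they can only be resolved at runtime.

```python
import re

# Hypothetical, simplified patterns. Real MultiValue BASIC has more forms
# (continuation lines, MAT arguments, commas inside string literals, comments).
SUB_DECL = re.compile(r"^\s*SUBROUTINE\s+([\w.]+)\s*(?:\((.*)\))?", re.IGNORECASE)
CALL_STMT = re.compile(r"\bCALL\s+([\w.]+)\s*(?:\((.*)\))?", re.IGNORECASE)


def count_args(arg_text):
    """Count comma-separated arguments; missing or empty text means zero."""
    if arg_text is None or not arg_text.strip():
        return 0
    return len(arg_text.split(","))


def declared_arity(sources):
    """Map each declared subroutine name to its number of parameters."""
    arity = {}
    for text in sources.values():
        for line in text.splitlines():
            match = SUB_DECL.match(line)
            if match:
                arity[match.group(1).upper()] = count_args(match.group(2))
    return arity


def check_calls(sources):
    """Return (program, line_no, message) warnings for direct CALL statements."""
    arity = declared_arity(sources)
    warnings = []
    for program, text in sources.items():
        for line_no, line in enumerate(text.splitlines(), start=1):
            for match in CALL_STMT.finditer(line):
                name = match.group(1).upper()
                passed = count_args(match.group(2))
                if name not in arity:
                    warnings.append((program, line_no, f"{name} not found"))
                elif arity[name] != passed:
                    warnings.append((program, line_no,
                                     f"{name} expects {arity[name]} argument(s), got {passed}"))
    return warnings


# Made-up source snippets, keyed by program name.
sources = {
    "LOG.REQUEST": "SUBROUTINE LOG.REQUEST(USER.ID, REQUEST, STATUS)\nRETURN\nEND",
    "MAIN": "CALL LOG.REQUEST(USER.ID, REQUEST)\nCALL SEND.EMAIL(USER.ID)\nCALL @HANDLER(X)",
}
for warning in check_calls(sources):
    print(warning)
```

The third call in MAIN produces no warning at all, which is exactly the blind spot described above: the checker cannot know what @HANDLER will resolve to.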

Coding Context

The focus of unit testing is deliberately kept very narrow to ensure tight red/green/refactor cycles that can be quickly repeated during active development and test the low level behaviour out of context ("build the code right"). Once the code has passed its unit testing, we can widen our gaze to include those workflows and scenarios in which the code is destined to play its part ("build the right code"). Unit tests can typically be executed in any order, but for integration testing we need to think in terms of sequences.

A typical example of such a workflow can be found in a support system website I recently developed for a software house. This allowed end users to log calls and to have them approved or rejected by their supervisors before being passed to the support team. Having developed the back end of the application as a series of UniVerse Basic subroutines, each with its own unit test script, the next stage involved sketching these out into a number of workflows, for example:

- User logs a request -> request rejected -> email sent -> request removed.

- User logs request -> request accepted -> email sent -> support notified -> diary updated.

- Additional information requested -> user supplies information -> support notified.

- Additional information requested -> user uploads attachment -> support notified.

- User searches for request -> request retrieved -> user closes call -> support notified.

You may notice that these are very similar to acceptance criteria and document how the system should operate in the same way that unit tests should document how the code works.
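One way to picture such a workflow as a sequenced test is the Python sketch below. The step functions are hypothetical in-memory stand-ins for the back-end subroutines, and the runner is an assumption made for illustration rather than any particular framework's API. The point is only that each step depends on the state left behind by the one before it.

```python
def run_workflow(name, steps, context):
    """Run steps in order; stop at the first failing step and report it."""
    for step in steps:
        try:
            step(context)
        except AssertionError as error:
            return f"{name}: FAILED at {step.__name__}: {error}"
    return f"{name}: passed"


# Hypothetical stand-ins for the subroutines under test.
def log_request(ctx):
    ctx["requests"]["R1"] = {"status": "LOGGED"}


def reject_request(ctx):
    request = ctx["requests"].get("R1")
    assert request and request["status"] == "LOGGED", "nothing to reject"
    request["status"] = "REJECTED"


def send_email(ctx):
    ctx["emails"].append("R1")


def remove_request(ctx):
    assert "R1" in ctx["emails"], "user was not notified before removal"
    del ctx["requests"]["R1"]


context = {"requests": {}, "emails": []}
result = run_workflow(
    "reject path",
    [log_request, reject_request, send_email, remove_request],
    context,
)
print(result)
```

Running the same steps out of order, for example rejecting before logging, fails at the first step, which is what distinguishes a sequenced test from a set of independent unit tests.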

Most testing frameworks have some concept of a sequenced test, whether scripted or recorded from user activities. In Visual Studio you can create an Ordered Test that batches together a set of tests in a defined order to simulate a workflow. More sophisticated automated acceptance testing tools like FitNesse can match a test to an acceptance specification. For mvTest I discovered that I needed to take the sequence concept a stage further to allow for the volume and concurrency testing needed to exercise a MultiValue application. Individual tests can therefore be arranged into batches that operate in sequence, and those batches can then be arranged into test runs. Test runs can be kicked off in parallel, with the batches executed in a randomized order for a set number of iterations.
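The arrangement of tests into batches and batches into test runs can be sketched roughly as follows. This is a Python illustration under stated assumptions, not mvTest's actual scripting: the function names are invented, the tests are trivial callables, and the random order is seeded (my addition) so that a failing order could be replayed.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def run_batch(batch, log):
    """A batch is an ordered list of tests; its internal order never changes."""
    for test in batch:
        log.append(test())


def run_test_run(run_name, batches, iterations, seed):
    """Execute the batches in a freshly shuffled order on each iteration."""
    rng = random.Random(seed)  # seeded so a failing order can be replayed
    log = []
    for _ in range(iterations):
        order = list(batches)
        rng.shuffle(order)
        for batch in order:
            run_batch(batch, log)
    return run_name, log


def make_test(label):
    """Hypothetical test that just reports its own label."""
    return lambda: label


order_entry = [make_test("create order"), make_test("confirm order")]
returns = [make_test("find order"), make_test("book return")]
lookup = [make_test("product lookup")]

# Two test runs kicked off in parallel, each with its own seed.
with ThreadPoolExecutor(max_workers=2) as pool:
    futures = [
        pool.submit(run_test_run, f"run-{n}", [order_entry, returns, lookup], 3, n)
        for n in range(2)
    ]
    results = dict(future.result() for future in futures)

for name, log in results.items():
    print(name, log)
```

Shuffling at the batch level keeps each workflow intact (an order is always confirmed straight after it is created) while still varying how the workflows interleave across iterations.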

When I was testing for a retail group approaching the New Year sales, the test plan involved creating a minimum number of orders, product lookups, deliveries, returns and so forth during each two hour slot. These volumes represented (and far exceeded) the peak operations of their stores, all generated by driving the application through workflows that simulated user activity. To make it appear more realistic, natural timings for entering data were added to replace the normal high speed automated operations.

This is where a scripted testing language is important rather than a simple rig that pushes data and expects responses: scripting allows the tests to expect and handle exceptions such as locking or thresholds. If you are pounding a sales application through a test rig, you should expect to run out of stock or delivery slots at some point, and both your system and the test scripts should handle this appropriately.
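The difference can be illustrated with a short Python sketch. Everything here is hypothetical: FakeSalesSystem stands in for the application under test, and the exception names and retry limit are assumptions. What matters is that the script treats a lock or an out-of-stock condition as an outcome to be verified, not as a test failure.

```python
class OutOfStock(Exception):
    """Hypothetical error raised when an order exceeds available stock."""


class RecordLocked(Exception):
    """Hypothetical error raised when another user holds the record."""


class FakeSalesSystem:
    """Hypothetical stand-in for the application under test."""

    def __init__(self, stock, locked_attempts=0):
        self.stock = dict(stock)
        self.locked_attempts = locked_attempts  # simulates a briefly held lock

    def place_order(self, part, quantity):
        if self.locked_attempts > 0:
            self.locked_attempts -= 1
            raise RecordLocked(part)
        if self.stock.get(part, 0) < quantity:
            raise OutOfStock(part)
        self.stock[part] -= quantity


def order_script(system, part, quantity, max_retries=3):
    """Return 'ordered', 'out of stock' or 'gave up'; all are valid outcomes."""
    for _ in range(max_retries + 1):
        before = system.stock.get(part, 0)
        try:
            system.place_order(part, quantity)
        except RecordLocked:
            continue  # someone else holds the record: try again
        except OutOfStock:
            # Expected under load; the check is that stock was left untouched.
            assert system.stock.get(part, 0) == before
            return "out of stock"
        assert system.stock[part] == before - quantity
        return "ordered"
    return "gave up"


system = FakeSalesSystem({"WIDGET": 3}, locked_attempts=1)
outcomes = [order_script(system, "WIDGET", 2) for _ in range(2)]
print(outcomes)
```

A simple push-and-compare rig would report the second order as a failure; the script instead confirms that the system refused it cleanly.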

Code Deployment

There is no point in testing your code on a development system only to watch it break once it has been released. Integration testing ideally takes place on a dedicated SIT or a like-live system that allows you to ensure that the code works in context after it has been deployed. Integration testing is as much about testing the deployment as the build.

A prerequisite for any integration testing must be a smooth, predictable, and completely reproducible deployment model that requires little to no manual intervention. If your deployment requires you to create or copy assets by hand, you cannot predictably ensure that your deployment to live will succeed and there is no point in considering integration testing until that has been resolved. In this context, code involves the widest definition of source — not only your programs but every asset required to ensure the running of your application.

Here is a simple question you can ask yourself (quietly, with a drink close to hand). If you recompiled all your code tomorrow are you confident it would still work the same? If you lost all your dictionaries, could you simply reinstate them? If you redeployed all your setups in order, would you have a working solution?

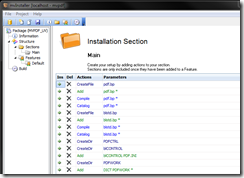

Deployment solutions for MultiValue applications are few, but they do exist. mvInstaller generates packages similar in concept to the msi packages used for Windows setups, and includes code, data and commands to execute to complete the installation (fig. 1). Tellingly, it was the very first thing I wrote on becoming self-employed, as I needed to be certain I could deploy subsequent solutions successfully. Alternatives you should consider include Susan Joslyn's highly regarded lifecycle management product PRC, which covers deployment, auditing, and compliance. Rocket is also adding U2 deployment to their Aldon product.

Fig. 1

Continuous Integration (CI)

Integration testing is a broad term that is variously applied to aspects of smoke testing, workflow testing, and systems testing, through to automated acceptance testing. What they all have in common is an aim to complete full code coverage in a hands-off manner, to complement user acceptance testing. Unfortunately, most of the leading MultiValue platforms cannot return code coverage statistics despite being implemented as run machines, a fact that severely restricts moves to ascertain the effectiveness of any test plans.

An extreme form of coverage is Continuous Integration (CI) testing, following on from the daily or continuous builds mentioned above. In a CI environment, changes are automatically deployed to a black box server that runs through the integration tests, either on a continual basis or when triggered automatically by each new deployment. A CI server notifies the development team of any errors but stays quiet about successes, so that developers can focus on fixing, backing out, and redeploying.
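The "quiet on success" behaviour is simple to sketch. The following Python outline is an assumption about how such a reporting step might look, not a description of any particular CI server; the function names, the deployment identifier, and the notify callback are all hypothetical.

```python
def run_integration_tests(tests):
    """Run every (name, callable) pair and collect failures only."""
    failures = []
    for name, test in tests:
        try:
            test()
        except Exception as error:  # any error counts as a failed test
            failures.append(f"{name}: {error}")
    return failures


def report(deployment_id, failures, notify):
    """Stay quiet on success; send one message listing every failure."""
    if not failures:
        return False
    notify(f"Deployment {deployment_id} failed {len(failures)} test(s):\n"
           + "\n".join(failures))
    return True


# Hypothetical tests standing in for the integration suite.
def passing_test():
    pass


def failing_test():
    raise AssertionError("diary not updated")


sent = []  # stands in for an email or chat notification channel
failures = run_integration_tests(
    [("log request", passing_test), ("accept request", failing_test)]
)
report("build-17", failures, sent.append)
print(sent)
```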

UI Driven Testing

In the support system scenarios above I am testing the backend logic. By designing the application so that there is a clear separation between the UI and the business logic I can easily test these in isolation to localize any errors. But for legacy systems and those built using some 4GLs, that is not always possible.

A key decision in the design of mvTest was therefore to allow UI testing alongside the regular scripting and to have this operate as part of the same scripts. So the test runners include a virtual terminal emulator that can open Telnet, SSL, or SSH connections to the database and run the application in the same way as a terminal user. This would be possible using other standard telnet scripting tools that can also check the status of the screen and report on what is being displayed, but at the same time I wanted to be free to handle any error messages or lock conditions and to directly access the database through a regular connection to set up the initial data or to check what those screens have actually performed at the database level. That meant running the UI testing through a tool that was itself MultiValue-aware.

Most MultiValue testing is founded on some form of data entry, enquiry, or data flow, and so it was also important to add support for randomly creating or selecting test data: a date between two values, a key from another file, a line from an Excel spreadsheet.
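As a rough illustration of those three kinds of generated data, here is a Python sketch. The helper names are invented, the key list is a stand-in for record ids selected from another file, and the spreadsheet is assumed to have been exported as CSV so that only the standard library is needed.

```python
import csv
import io
import random
from datetime import date, timedelta


def random_date(rng, start, end):
    """Pick a date between start and end, inclusive."""
    span = (end - start).days
    return start + timedelta(days=rng.randint(0, span))


def random_key(rng, keys):
    """Pick a key from a list of record ids read from another file."""
    return rng.choice(keys)


def random_row(rng, csv_text):
    """Pick one data row from spreadsheet content exported as CSV."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return rng.choice(rows)


rng = random.Random(42)  # seeded so a failing data set can be regenerated

customer_keys = ["C1001", "C1002", "C1003"]          # made-up record ids
parts_sheet = "part,quantity\nWIDGET,2\nGADGET,5\n"  # made-up spreadsheet rows

order_date = random_date(rng, date(2024, 1, 1), date(2024, 1, 31))
customer = random_key(rng, customer_keys)
line = random_row(rng, parts_sheet)
print(order_date, customer, line)
```

Seeding the generator is a design choice worth considering: random data widens coverage, but a failure is only useful if the same data can be produced again.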

So a typical example might go like this: run an order entry screen over Telnet, select a customer by lookup, enter a number of parts and quantities, check the totals displayed, file the screen, grab the order number reported, then from the other connection read that order from the database and check it has all the required data (fig. 2).

Fig. 2

But what of other interfaces and UIs? Integration testing should ideally be end-to-end, exercising product feeds, web and desktop UIs and all the paraphernalia that attends a modern MultiValue solution. How far that is possible depends to a large extent on the mix (and cost) of technologies and the involvement of teams responsible for those, and the availability of appropriate testing tools to target those platforms.

Most environments today support open source testing tools such as Project White for Windows Forms and WPF, and WatiN or Selenium for web browser applications. All of these automate testing through their respective target UIs and so run at a step removed from the database. This limits their reach to those things that are visible in, or can be instigated through, the UI. With that limitation understood, they should still form part of your arsenal.

Data feeds, message queues, and third party participants may always lie outside the practical scope of your integration testing and will thus need to be simulated unless you can dive directly into the code behind them. As with all such matters, how easily that can be done comes down to your application design, how far you have separated out the dependencies from the core business logic, and how willing you are to refactor your legacy code if not.

In the next article I will be looking at refactoring and some of the lessons learned in applying automated testing to legacy code.