Data Replication

If I were to ask the question: "How do you define what is meant by data security?" I'm willing to place a bet that everyone reading this would initially think of protecting data from unauthorized access, or a more commonly used word, a 'hack.'

While that is a correct answer, data security is so much more than that. Protecting against 'hacks' is extremely important, and in some cases legally required, but maintaining access to data for authorized persons is equally important. It is another form of data security that must be considered.

Hopefully, we all take backups of our databases that can be restored in the event of a failure. Unfortunately, this can be a slow way to recover a system. And it can lead to frustration from users who cannot perform their tasks without having access to your data. We need more than just backups.

So Just What is Data Replication?

Well, "it's the frequent electronic copying of data from a database on one computer or server to a database on another." Furthermore, if it's truly replication, it's done behind the scenes without you having to worry about it, in as near real-time as possible.

In this article I'm going to explore three options that are available in Reality today that can assist you with data replication: Failsafe, RealityDR, and Fast Backup. Before we start on these, I have to share a word of caution about relying on third-party snapshot technologies, such as those that are part of a VM infrastructure deployment, for replication. Replication at this level can result in an incompletely replicated database. A Pick system can take several writes to update an item (e.g. an out-of-group item), and the snapshot could, in theory, be taken between these writes, leaving the replicated database corrupted.
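To make the multi-write risk concrete, here is a minimal toy model in Python. It is only a sketch: the two-step layout (a pointer in the group, then the item body in a separate frame), the function names, and the item ID are all hypothetical and do not reflect Reality's actual on-disk format.

```python
import copy

def write_item(disk, item_id, body, between_writes=None):
    """Toy update that needs two separate writes (hypothetical layout)."""
    frame = f"frame-{item_id}"
    disk["group"][item_id] = frame        # write 1: pointer stored in the group
    if between_writes is not None:
        between_writes()                  # an external snapshot could land here
    disk["frames"][frame] = body          # write 2: item body in its own frame

def is_consistent(disk):
    """Every pointer in the group must resolve to a stored frame."""
    return all(frame in disk["frames"] for frame in disk["group"].values())

disk = {"group": {}, "frames": {}}
snapshots = []

# Simulate a storage-level snapshot firing between the two writes.
write_item(disk, "INV1001", "large item body",
           between_writes=lambda: snapshots.append(copy.deepcopy(disk)))

live_ok = is_consistent(disk)               # the live database finished both writes
snapshot_ok = is_consistent(snapshots[0])   # the snapshot holds a dangling pointer
print(live_ok, snapshot_ok)
```

The live database ends up consistent, but the copy taken mid-update does not, which is the failure mode the caution above describes.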

Fast Backup

That brings me to our first option, Fast Backup. While technically not a replication service, you can use this tool to take a valid snapshot and restore it quickly onto a second database. Reality's Fast Backup capability allows gigabytes of data to be backed up in just a few minutes and restored to another database just as quickly; far faster than traditional MultiValue saves. The key part of this is that it does have the ability to take a clean, known-state backup that will be guaranteed to be intact. It also boasts the ability to suspend updates to a database so that you can use third-party tools to replicate the database safely, eliminating the multi-write issue highlighted above. You can achieve this with the command realdump -fs to freeze updates (and commit to disk) and realdump -u to once again allow updates. This command line utility can be embedded into third-party backup scripts to ensure that a safe snapshot or checkpoint is taken.
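As a rough sketch of how the freeze and unfreeze commands might be embedded in a backup script, the Python wrapper below runs realdump -fs, calls a snapshot step, and then always runs realdump -u. Only those two command forms come from the text above. Any further arguments realdump may need (for example, to identify the database) are not shown, and take_snapshot is a hypothetical placeholder for whatever third-party tool you use. The demonstration uses a fake runner so that it works on a machine without Reality installed.

```python
import subprocess

FREEZE_CMD = ["realdump", "-fs"]   # from the article: freeze updates and commit to disk
THAW_CMD = ["realdump", "-u"]      # from the article: allow updates again

def snapshot_while_frozen(take_snapshot, run=subprocess.run):
    """Freeze updates, run the third-party snapshot step, then always thaw."""
    run(FREEZE_CMD, check=True)
    try:
        return take_snapshot()
    finally:
        # Runs even if the snapshot step raises, so updates are not left frozen.
        run(THAW_CMD, check=True)

# Dry run with a fake runner that records the commands instead of executing them.
calls = []

def fake_run(cmd, check=True):
    calls.append(cmd)

result = snapshot_while_frozen(lambda: "snapshot-done", run=fake_run)
print(calls, result)
```

The try/finally is the important design choice: if the snapshot tool fails, users should still regain the ability to update the database.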

A close look at the Failsafe and RealityDR products shows some similarities: they are true transaction-based systems and replicate the data in as near real-time as we can possibly get it, depending on the site's networking. A true transaction-based system is one that records items that have changed in a transaction log file. It only records the actual items that have changed and not entire file groups or disk sectors where the change has occurred. This potentially saves huge amounts of data writes as well as affording the users transaction commit, abort, and rollback capabilities.

Failsafe

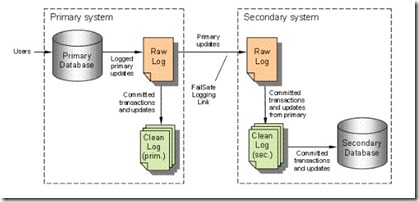

Failsafe is integrated into Reality and consists of a primary (the live server) and a secondary (the backup server). This feature is available across all of Reality's supported platforms. Users log in to the primary server and perform their usual daily tasks. All updates to files that are correctly flagged for transaction logging are replicated to the secondary server. The update operation is also recorded in a Rawlog partition or file. This holds all before-and-after images of the items.

Once the update(s) are committed, the 'after' image is transferred to a transaction clean log file (commonly called a CLOG file) while simultaneously being transferred to the secondary server to ensure that the update is replicated. Certain operations do not result in an item being transferred but instead result in an operational record being logged, such as a DELETE or CLEAR-FILE operation. Index updates are not transferred because the indexes will automatically be updated on the secondary server upon replay of the transferred item onto that database. [Figure 1] explains this further.
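The flow described above can be sketched with a small in-memory model. This is an illustration under stated assumptions, not Failsafe's implementation: the Database, Primary, and replay names, the record layout, and the sample item IDs are all hypothetical. It shows before-and-after images in a raw log, 'after' images moving to a clean log on commit, a DELETE travelling as an operational record, and the secondary rebuilding its own index during replay.

```python
class Database:
    """Toy database: items plus an index that is maintained on every write."""
    def __init__(self):
        self.items = {}
        self.index = {}                      # value -> set of item ids

    def _unindex(self, item_id):
        old = self.items.get(item_id)
        if old is not None:
            self.index[old].discard(item_id)
            if not self.index[old]:
                del self.index[old]

    def write(self, item_id, value):
        self._unindex(item_id)
        self.items[item_id] = value
        self.index.setdefault(value, set()).add(item_id)

    def delete(self, item_id):
        self._unindex(item_id)
        self.items.pop(item_id, None)


def replay(db, record):
    """Apply one clean-log record; the index is rebuilt by the write itself."""
    op, item_id, after = record
    if op == "DELETE":
        db.delete(item_id)                   # operational record, no item image
    else:
        db.write(item_id, after)


class Primary:
    def __init__(self, secondary):
        self.db = Database()
        self.secondary = secondary
        self.rawlog = []                     # (op, item_id, before, after), uncommitted
        self.clog = []                       # committed records only

    def write(self, item_id, value):
        self.rawlog.append(("WRITE", item_id, self.db.items.get(item_id), value))
        self.db.write(item_id, value)

    def delete(self, item_id):
        self.rawlog.append(("DELETE", item_id, self.db.items.get(item_id), None))
        self.db.delete(item_id)

    def commit(self):
        for op, item_id, _before, after in self.rawlog:
            record = (op, item_id, after)    # only the 'after' image is kept
            self.clog.append(record)
            replay(self.secondary, record)   # sent to the secondary at the same time
        self.rawlog.clear()

    def abort(self):
        # Undo in reverse order using the 'before' images.
        for _op, item_id, before, _after in reversed(self.rawlog):
            if before is None:
                self.db.delete(item_id)
            else:
                self.db.write(item_id, before)
        self.rawlog.clear()


secondary = Database()
primary = Primary(secondary)
primary.write("CUST1", "LONDON")
primary.write("CUST2", "LONDON")
primary.commit()
primary.delete("CUST2")
primary.commit()
primary.write("CUST1", "PARIS")
primary.abort()                              # never reaches the clean log or secondary
print(primary.db.items, secondary.items, secondary.index)
```

Note that no index data appears in the clean log at all; the secondary's index is correct simply because replay goes through the same write path.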

Fig. 1

Configuration and management of Failsafe are performed via the utility TLMENU. In the event of a primary failure or planned maintenance of the primary system, the administrator would switch the networking for users to the secondary server. They'd use TLMENU to swap the flags, making the secondary into the primary. The administrator can tend to the now isolated old primary server, make the necessary repairs or maintenance, and then once again use TLMENU to re-synchronize the servers. All the while, the users remain logged in, performing their daily tasks.

As the servers are typically identical, but not necessarily so, the administrator may choose to leave the users on the new primary. This is all done without loss of transaction integrity and with minimum loss of service availability to users.

Depending on the throughput of the database, tens of millions of items can be updated each and every day. This, in turn, can generate significant network traffic between the primary and secondary servers. For this reason, it's recommended that the two systems have an isolated network between them which is used purely for the Failsafe traffic. This would lead to the best performance, with updates being replicated in real-time on the secondary server.

Failsafe can operate over the general user population's network. This should be configured as a backup route for traffic should the dedicated route fail. Due to the network traffic that this will create, it's recommended that these servers be in close physical proximity to each other.

While I'm on the subject of performance, it's worth mentioning that you should carefully consider the placement of the Rawlog and transaction logs (CLOGs) on a server, as you want to avoid conflicts at the disk level between these and the actual database(s). If at all possible, I recommend having the database, Rawlog, and CLOG files all on separate disk spindles to gain the best performance at the hardware level. Again, this is dependent on the application, since lighter applications might not need the extra speed advantage.

The utility tlmenu is extremely comprehensive, and it would be impossible to document fully in this article. I would highly recommend that you refer to the user guides to assist in navigating through the options.

RealityDR

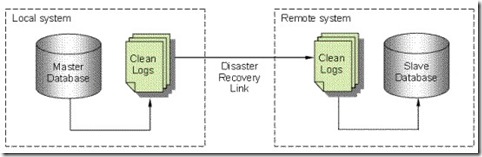

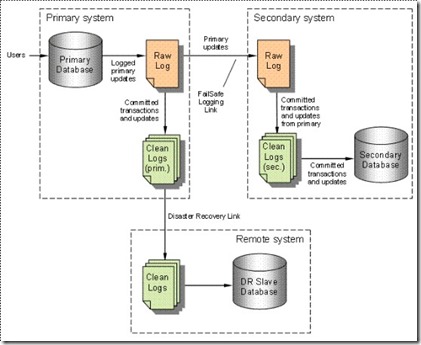

Reality Disaster Recovery (RealityDR) provides a further level of replication capability for Reality databases, including off-site replication of a standalone system [Figure 2] or an off-site replicated system for a Failsafe system [Figure 3]. This further strengthens the security of your data.

Fig. 2

Fig. 3

Increasing the resilience of a Failsafe system gives the added benefit that if the secondary is taken offline, you would still remain protected against a primary failure. Furthermore, in a true disaster situation where both primary and secondary systems are unavailable, an off-site backup system ensures that your data is secure.

The above diagram [Figure 2] shows a typical stand-alone RealityDR system while the diagram below it [Figure 3] details RealityDR together with Failsafe.

RealityDR is designed to operate over a loosely coupled network, i.e. the server is physically located some distance away, such as in a Disaster Recovery Center, and/or the network link may be less reliable or less performant. The main server takes the role of the master, with the slave system (RealityDR) kept up to date by means of the CLOG files copied from the master. If the slave system becomes unavailable for some reason, the master (the one users are connected to) continues as before; transfer of the clean logs will resume when the slave again becomes available. All of the operations are managed via the utility tlmenu. Activation of the slave system, should this become necessary, is a manual step.
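The "continue as before, then resume" behaviour can be pictured as a simple queue on the master side. The sketch below is a conceptual model only: the CleanLogShipper class, the send function, and the log names are hypothetical, and RealityDR's real transfer mechanism is configured through tlmenu rather than written by hand.

```python
from collections import deque

class CleanLogShipper:
    """Toy master-side queue of clean logs awaiting transfer to the DR site."""
    def __init__(self, send):
        self.send = send            # hypothetical transfer function; raises ConnectionError on failure
        self.pending = deque()

    def log_closed(self, name):
        """The master keeps producing clean logs whether or not the link is up."""
        self.pending.append(name)

    def ship(self):
        """Send pending logs oldest first; stop at the first failure and keep the rest."""
        shipped = []
        while self.pending:
            try:
                self.send(self.pending[0])
            except ConnectionError:
                break
            shipped.append(self.pending.popleft())
        return shipped


link = {"up": False}
received = []

def send(name):
    if not link["up"]:
        raise ConnectionError("DR site unreachable")
    received.append(name)

shipper = CleanLogShipper(send)
shipper.log_closed("clog.0001")
shipper.log_closed("clog.0002")
first_attempt = shipper.ship()      # link down: nothing sent, nothing lost
link["up"] = True
shipper.log_closed("clog.0003")
second_attempt = shipper.ship()     # backlog drains in the original order
print(first_attempt, second_attempt)
```

Shipping strictly oldest first matters here, because the slave has to apply the clean logs in the same order the master committed them.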

It is good to have multiple options for your data security because business needs are always changing.